Blog: Legal and Policy Frameworks as Enablers of Artificial Intelligence in Public Transport

The potential of artificial intelligence (AI) as a tool to reshape urban mobility patterns has generated considerable optimism across many global industries, public transport being no exception.

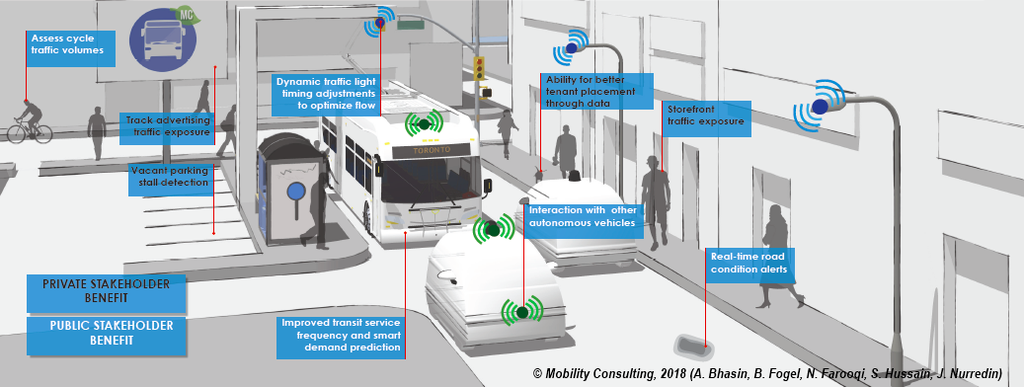

Many argue that AI can enhance rider experiences by facilitating frictionless, seamless mobility in a way that meets currently unmet rider needs.[1] This is in addition to the potential of AI to cost-effectively address several systemic challenges facing public transport globally, including the first-and-last-mile problem, maintaining high service quality and reliability, and coordinating evidence-based decision making using high-quality data sets. At one extreme, AI is envisioned to facilitate the transition towards greater automation of public transit vehicles, yielding many operational changes that parallel the movement towards autonomous private vehicles and Mobility as a Service platforms, which are largely regarded as a way to reduce costs and free up time for drivers and riders alike.

In many ways public transport agencies have begun to embrace AI, such as by piloting autonomous public shuttles and buses,[2] integrating Information Technology Services into operational practices, and partnering to deploy Mobility as a Service platforms. Notwithstanding this optimism, private mobility platforms, such as ride-hailing companies, are increasingly viewed as a growing threat to the ongoing relevance of public transport, especially as these companies continue to invest millions of dollars into AI platforms that respond to rider mobility needs and reduce driver costs, such as through the development of self-driving vehicles. To remain a viable component of future urban mobility patterns, public transport needs to explore the opportunities AI presents.

Adopting any AI tool requires a strong business case. The success of such a business case is contingent upon an environment that enables and complements the proposed changes. The legal and policy environment surrounding AI in public transport is one such enabler which, if properly crafted, can proactively address the breadth of legal challenges posed by AI. For public transport agencies, actively considering the legal and policy issues of AI can set in place a framework to test, manage, and benchmark integration challenges against the proposed benefits of a particular AI tool.

To develop a legal and policy framework, public transport agencies need to carefully consider at least three key questions: i) what is the nature of the AI tool to be deployed, and can that tool be characterized as a product or a service; ii) what is the purpose of that tool, namely as an instrument, agent, or autonomous person; and iii) who has control over the AI tool.[3] Once these questions are answered, the breadth of legal risks of adopting such AI can be assessed. This sets the foundation for drafting a framework that mitigates potential legal risks. These legal risks largely relate to data usage, accountability, and scalability.

Data Usage

AI involves the use of data to transform inputs into outputs; the greater the degree of AI-based automation, the larger the quantities of data required to process, verify, and reinforce conclusions.[4] Data intersects at least three main areas of law. The first is privacy law, which pervades the entire data value chain, beginning with how and where data is collected, whether confidential data is collected and anonymized, its intended use, if and how consent is obtained, who accesses and stores data, and where and how data is stored. Different jurisdictions, standards, and sources of data increase the complexity of managing data privacy risks for public transport agencies. Establishing a data governance framework can assist in mitigating these legal issues while also offering public transport agencies a foundation when engaging in discussions about data access with private platforms.[5] The second area relates to protecting data from cyber-attacks and data leaks. Public transport agencies can be viewed as stewards of data, especially due to the high level of trust riders generally place in them. Finally, there are several intellectual property dimensions related to AI, as an AI program or product may be patentable, subject to onerous licensing regimes, or reliant upon data subject to copyright protection.

Accountability

Assigning responsibility when an AI tool or system fails to achieve its intended uses is an area where contract and tort laws interact to create considerable uncertainty if legal risks are not proactively addressed. For example, public transport agencies should consider who is responsible for a breach of contract caused by an AI system or tool, and what risk mitigation strategies should be undertaken, whether by contract or through additional insurance.[6] This may result in active negotiation of contractual warranties, indemnities, and limitation of liability terms when purchasing or leasing an AI system. As well, if AI tools or systems act as agents for providers of public transport in order to facilitate contracts with riders or other third parties, consideration should be given to the risk that such contracts may not be viewed as legally valid.

Public transport agencies may also be exposed to tort liability if harm results from an AI tool or system. Safety standards in public transport are generally much higher than those for private forms of urban mobility, such that establishing a framework to ensure AI tools do not compromise those standards is critical. Given the broad reach of AI in public transport, laws are still evolving with respect to core tort law questions, such as whether liability arises, whether an AI tool or system is an agent for providers of public transport, and how causation between harm and the operation of AI systems is defined. In the absence of legal certainty, public transport agencies should assess potential legal risks, such as by analysing the operation of comparable AI systems with a view to understanding the scope of the proposed AI tool or system relative to its intended use, how it could deviate from those intended uses, and the standards for decommissioning it should it fail to meet the needs of stakeholders.[7] This analysis can assist in setting safety thresholds for acceptable AI deployment as well as creating a basis for instructing legal practitioners when crafting disclaimers, disclosures, and terms of use for third parties with a view to minimizing unnecessary tort claims.

Scalability

The deployment of AI tools and systems intersects several laws and policies, such as those related to employment, competition, and urban planning. The deployment of highly automated AI tools or systems can raise legal questions about the scope of existing employment relationships and whether retraining obligations arise in regulated labour environments. From a policy perspective, the successful deployment of automated AI systems is highly contingent upon managing labour and employment relations. As well, AI systems that regulate the flow of data or rider purchasing decisions may interact with legislation that prohibits price fixing or the undue lessening of competition. Finally, highly automated AI tools are likely to interact with various departments, networks, and fixed infrastructure, such that a governance framework for urban planning decisions may be needed to facilitate ongoing coordination.

In the absence of a legal and policy framework for AI in public transport, the above issues will be addressed in the context of existing or new legal frameworks that may result in suboptimal outcomes for public transport agencies. For example, relying upon existing frameworks to interpret privacy obligations may mean that legal adjudicators will return to first-order legal principles in order to locate an analogous legal situation. When an AI tool or system is not well understood or sufficiently deployed, it may be difficult for adjudicators to evaluate how AI is similar or dissimilar to a well-defined situation, yielding uncertain legal outcomes for public transport agencies. On the other hand, new legal regimes for AI are often fragmented, involve limited interaction with existing frameworks and jurisdictions, and risk becoming obsolete as automation evolves.[8] As well, legal definitions may not be clear and can rely upon local standards. This leaves room for adjudicators to exercise discretion when determining a legal issue. A legal and policy framework can aid adjudicators when arriving at legal conclusions under existing or new legal frameworks.

As public transport agencies are generally subject to higher safety, reliability, and operational standards than private mobility providers, establishing a legal and policy framework is critical to guide the implementation of AI in public transport in a way that upholds the level of trust riders place in their public transport networks. These frameworks should evolve as circumstances change and be customized for each public transport agency to reflect diverse training policies, cultural dynamics, and feedback processes. For public transport agencies considering the adoption of AI, whether through limited data analysis tools or long-term machine learning processes, taking a proactive approach to the legal and policy dynamics of AI can turn what is otherwise a daunting maze of legal issues into an enabler for the deployment of AI in public transport.
